In an era defined by skyrocketing digital experiences, services, and solutions – a trend that was sped up by the pandemic – guarding people’s identities in the digital realm is more important than ever. And as delivering both high security and a superior user experience has become a crucial challenge for digital solutions across industries, companies are turning to biometric authentication methods, including face recognition, for an accurate, fast, and easy-to-use method for digital identity verification. Although face recognition offers a number of compelling benefits relative to traditional authentication methods, its use elevates the importance of introducing sophisticated measures to protect against biometric fraud attempts.

In this article, we delve into the world of liveness detection – a technology that defends face authentication against physical presentation attacks. We’ll explore the significance, functionality, and real-world applications of liveness detection, review how liveness performance testing works, and put it in the context of its counterpart for digital presentation attacks – deepfake detection.

Importance of Liveness Detection

In the realm of secure digital interactions, face recognition technology stands tall. Its accuracy, coupled with user convenience, makes it an ideal choice for identity verification. However, the technology can still fall prey to direct attacks, often referred to as “spoofs” or presentation attacks. As face recognition continues to revolutionize identity verification, it is vital to address the growing concern of these attacks, whether they are physical (such as photo printouts, high resolution displays, or three-dimensional masks) or digital (such as deepfakes or related digital face manipulations).

Liveness detection steps in as a crucial companion to face recognition to detect the presence of physical presentation attacks. By ensuring that a face image presented to an authentication technology is from a real, live person, liveness detection adds a necessary layer of security, especially for remote, unattended authentication.

Understanding Liveness Detection

Also referred to as “anti-spoofing” or “presentation attack detection,” liveness detection, or “liveness,” is a suite of technologies that aim to differentiate authentic users from fraudulent attempts by analyzing face images or videos with a combination of hardware and software. Liveness seeks to detect or protect against physical presentation attacks, such as high resolution displays, printed photos, or 3D masks. Later, we will explore common types of attacks as well as the difference between them and digital presentation attacks (including deepfakes).

Detecting presentation attacks often requires an advanced combination of imaging and AI-based analysis to confirm the liveness of an authenticated face. Therefore, the success of liveness detection hinges on the seamless interaction between hardware and software, ensuring accuracy and usability are maximized. Liveness detection, like so many biometric processes, is a systems issue, not just a software challenge.

Choosing the Right Camera Technology

Liveness detection systems utilize various camera technologies. Specialized cameras, equipped with advanced sensors measuring depth, heat, or certain wavelengths of light, excel in accuracy and robustness, and can provide invaluable insights to liveness technologies. Due to their ability to use a multi-sensor approach to liveness, specialized cameras are ideal for use cases demanding the highest level of accuracy at high speeds, and where it is viable to deploy custom hardware. Typically, liveness detection for specialized cameras is deployed in enterprise physical access control, consumer air travel, payments and financial services, and automotive authentication – all applications where services are delivered through a terminal, kiosk, or embedded device.

On the other hand, standard cameras rely on visible light only. These include smartphone cameras, web cameras, and standard IP cameras, and they are often far more widely deployed and more cost effective than specialized, multi-sensor cameras. Standard camera liveness, while more challenging from a technological perspective, can deliver high performing liveness with the cameras and devices people already have in their possession, and is crucial for any remote enrollment or authentication scenario.

For this reason, liveness detection on standard cameras is the approach of choice for digital identity verification. While many smartphones have advanced, specialized cameras for liveness, they are locked down and enabled only for device-level access (rather than connected authentication). For example, Apple® Face ID® technology uses specialized cameras to deliver advanced liveness detection, but Face ID can only be used to locally authenticate a user to that device, and cannot be used in conjunction with digital identity verification for online (connected) authentication against a centralized identity repository.

Liveness Detection Methods for Digital Identity

Liveness detection mechanisms vary in the participation required from the user, and can roughly be classified into three paradigms: active, semi-active, and passive. Active liveness involves user participation in response to challenges like blinking, head movement, or smiling. Semi-active liveness might not ask for a specific movement from the user, but can project blinking lights or randomized colors onto a user’s face, or zoom a camera to detect depth information. The most advanced liveness technologies are able to conduct passive liveness, which requires no user involvement and provides the best user experience out of all liveness technologies.

Real-World Applications of Liveness Detection

Liveness detection is important in any digital identity scenario where security and convenience are paramount. Automated identification and authentication realms, such as digital access control, air travel, payments, government services, and digital identity verification, greatly benefit from liveness detection. Its integration reduces the need for manual oversight of biometric authenticity in scenarios where human intervention is unfeasible or insufficient. From enabling digital passenger enrollments to securing online bank transactions, liveness detection can bolster trust and efficiency, and enable completely new user experiences.

The most critical use cases for liveness are those where authentication is fully remote and unattended; in attended applications, human oversight can easily detect the use of spoofs. For instance, a high resolution printout displayed full-frame to a camera may not be immediately obvious as a spoof to a selfie-based digital identity scheme (unless, of course, proper liveness detection is used). However, in an attended environment, it would be immediately obvious that a fraudster is holding the same picture in front of their face. It so happens, though, that digital identity is most commonly fully unattended, at least for enrollment, as the whole idea is to serve people in an automated fashion at their home or office. With that in mind, liveness is a critical consideration for most self-service digital identity applications.

How Liveness Detection is Benchmarked

ISO – the International Organization for Standardization – has codified liveness detection in ISO/IEC 30107 “Information technology – Biometric presentation attack detection.” ISO/IEC 30107-1 provides a foundation for understanding and defines key terms and concepts. ISO/IEC 30107-2 introduces a standard for data interchange. ISO/IEC 30107-3, which is the most commonly referred to part of this standard, defines a standard for test and evaluation of liveness (referred to in the standard as “Presentation attack detection,” commonly abbreviated as PAD).

ISO/IEC 30107 is foundationally important to understanding liveness, but is also quite generic in nature. It does not detail specific threat levels, test methodologies, or acceptance criteria. It does define how liveness detection performance should be calculated, and in particular defines the following critical classification metrics:

- APCER – Attack Presentation Classification Error Rate – The rate at which a given system fails to properly detect a presentation attack, analogous to FAR (False Accept Rate) in biometric accuracy testing.

- BPCER – Bona Fide Presentation Classification Error Rate – The rate at which a given system fails to properly detect a bona fide image (e.g. a real face), analogous to FRR (False Reject Rate) in biometric accuracy testing.

- APNRR – Attack Presentation Non-Response Rate – The rate at which a given system fails to provide a response of any kind when an attack (spoof) is presented.

- BPNRR – Bona Fide Presentation Non-Response Rate – The rate at which a given system fails to provide a response of any kind when a bona fide (real) sample is presented.

In general, APCER is a measure of security (any miss could allow a spoof through), whereas BPCER, APNRR, and BPNRR are measures of convenience (any miss could result in a retry or a requirement to proceed to a secondary channel).
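To make the four metrics concrete, here is a minimal Python sketch that computes them from a list of labeled test presentations. The names `Outcome`, `Presentation`, and `pad_metrics` are hypothetical, and dividing every rate by all presentations of that type is a simplifying assumption; a formal ISO/IEC 30107-3 evaluation reports results per presentation attack instrument type and follows the standard's own definitions.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    ATTACK = "attack"            # system classified the presentation as a spoof
    BONA_FIDE = "bona_fide"      # system classified the presentation as live
    NO_RESPONSE = "no_response"  # system returned no decision at all


@dataclass(frozen=True)
class Presentation:
    is_attack: bool   # ground truth: was this presentation a spoof?
    outcome: Outcome  # what the liveness system reported


def pad_metrics(presentations):
    """Return APCER, BPCER, APNRR and BPNRR as fractions between 0 and 1.

    Assumption: each rate is computed over all presentations of that type.
    A rate is None when no presentations of that type were supplied.
    """
    attacks = [p for p in presentations if p.is_attack]
    bona_fide = [p for p in presentations if not p.is_attack]

    def rate(group, outcome):
        if not group:
            return None
        return sum(p.outcome is outcome for p in group) / len(group)

    return {
        "APCER": rate(attacks, Outcome.BONA_FIDE),    # spoofs accepted as live
        "BPCER": rate(bona_fide, Outcome.ATTACK),     # live users rejected
        "APNRR": rate(attacks, Outcome.NO_RESPONSE),  # spoofs with no decision
        "BPNRR": rate(bona_fide, Outcome.NO_RESPONSE),  # live users, no decision
    }


# Made-up example data: 10 attacks and 20 bona fide presentations.
sample = (
    [Presentation(True, Outcome.ATTACK)] * 8
    + [Presentation(True, Outcome.BONA_FIDE)] * 1
    + [Presentation(True, Outcome.NO_RESPONSE)] * 1
    + [Presentation(False, Outcome.BONA_FIDE)] * 17
    + [Presentation(False, Outcome.ATTACK)] * 2
    + [Presentation(False, Outcome.NO_RESPONSE)] * 1
)
metrics = pad_metrics(sample)
```

With this made-up sample, one of ten attacks is accepted as live and two of twenty real users are rejected, so the security metric (APCER) and the main convenience metric (BPCER) can be read off separately.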

Liveness Protection “Levels” and the Role of iBeta in Liveness Testing

The biometric identity industry commonly refers to the sophistication of physical presentation attacks through “Levels,” reporting for instance “ISO/IEC 30107-3 Level 1” compliance. However, it should be noted that these levels are not actually defined in the ISO standard itself; they are something the industry has come to accept as related to, but outside of, the standard.

With its NVLAP accreditation and broad use by biometric vendors across varying modalities and use cases, iBeta has become a leading certification body for ISO/IEC 30107-3, and has established a three-level hierarchy of presentation attack sophistication.

iBeta’s approach is accepted as a de facto standard and the framework of choice for analysis across varying applications. It includes three levels of presentation attacks, summarized here:

Level 1

- Expertise required: none; anyone can perform these attacks.

- Equipment required: easily available.

- Presentation attack instruments: a high resolution photo or printout of the face image, mobile phone display of face photos, hi-def challenge/response videos.

Level 2

- Expertise required: moderate skill and practice needed.

- Equipment required: available, but requires planning and practice.

- Presentation attack instruments: video display of face (with movement and blinking), paper masks, resin masks (targeted subject), latex masks (untargeted subject).

Level 3

- Expertise required: extensive skill and practice needed.

- Equipment required: specialized and not readily available.

- Presentation attack instruments: silicone masks, theatrical masks.
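For teams that track which attack types a test plan covers, the three levels summarized above can be captured in a small lookup table. This is only an illustrative Python sketch: the dictionary layout and the names `PAD_LEVELS` and `level_of` are hypothetical, and the entries simply restate the summary above rather than any official iBeta data format.

```python
# Level summary restated from the text above (not an official iBeta format).
PAD_LEVELS = {
    1: {
        "expertise": "none; anyone can perform these attacks",
        "equipment": "easily available",
        "instruments": [
            "high resolution photo or printout of the face image",
            "mobile phone display of face photos",
            "hi-def challenge/response videos",
        ],
    },
    2: {
        "expertise": "moderate skill and practice needed",
        "equipment": "available, but requires planning and practice",
        "instruments": [
            "video display of face (with movement and blinking)",
            "paper masks",
            "resin masks (targeted subject)",
            "latex masks (untargeted subject)",
        ],
    },
    3: {
        "expertise": "extensive skill and practice needed",
        "equipment": "specialized and not readily available",
        "instruments": ["silicone masks", "theatrical masks"],
    },
}


def level_of(instrument):
    """Return the level whose instrument list contains `instrument`, else None."""
    for level, info in PAD_LEVELS.items():
        if instrument in info["instruments"]:
            return level
    return None
```

For example, `level_of("paper masks")` looks up the Level 2 entry, while an instrument that is not listed returns `None` instead of guessing a level.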

iBeta maintains a list of Level 1 and Level 2 compliant vendors on its website. Note that while this webpage includes high-level compliance letters, full evaluation reports with detailed analysis are not posted and must be obtained from the vendors themselves.

The Role of NIST FATE in Liveness Testing

For years, the National Institute of Standards and Technology (NIST) has played a critical role in the evaluation of biometric technologies, in particular testing the accuracy of leading face recognition technologies in their FRTE series of benchmarks. In 2023, NIST added testing for face recognition liveness in the form of the NIST Face Analysis Technology Evaluation (FATE) Presentation Attack Detection (PAD). For a detailed description of NIST FATE PAD, please see Paravision’s blog post on NIST FATE PAD.

NIST FATE PAD is characteristically different from iBeta. Whereas iBeta is a live, operational pass / fail test of a given liveness (PAD) solution (which typically includes both software and a target hardware platform), NIST FATE PAD is a static test of certain types of presentation attack detection software (specifically those that can handle single frame, RGB face images). Because it is live, iBeta covers a smaller breadth of subjects than NIST. Meanwhile, NIST publishes specific accuracy metrics across different attack vectors in a leaderboard format, comparing the relative results of all participants, and does not offer pass / fail results.

The Role of FIDO in Liveness Testing

The FIDO Alliance standardizes certain approaches to online authentication, including biometric authentication, and is most commonly used by online service providers who leverage on-device biometrics for connected services. FIDO has set a standard for biometric matching and presentation attack detection. FIDO liveness (PAD) testing is completed by various test groups (which include iBeta, BixeLab, and others). To be clear, iBeta provides ISO 30107-3 testing according to its own criteria as well as FIDO testing according to FIDO’s criteria.

FIDO has established various levels of PAD, with its Level A and Level B similar to iBeta’s ISO 30107 Level 1 and Level 2 PAD described above. Akin to ISO’s APCER, FIDO uses “IAPAR” – Impostor Attack Presentation Accept Rate. For FIDO liveness testing, a vendor’s solution must deliver accuracy better than the rates specified in FIDO’s documentation.

One of the significant benefits of FIDO PAD testing is the extensive documentation for test protocol and reporting. At the same time, FIDO testing generally has more relaxed requirements than iBeta testing, allowing for instance up to 7% IAPAR whereas iBeta’s own test criteria require a 0% APCER. Vendors, integrators, and end users should be keenly aware of the different requirements across these different tests.
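The practical effect of these different limits can be sketched in a few lines of Python. The function name `passes_limit` and the attack counts are hypothetical; only the 7% and 0% limits come from the comparison above, and real test protocols also specify how many presentations are made and how results are grouped.

```python
def passes_limit(accepted_attacks, total_attacks, max_rate):
    """Return True if the share of accepted attacks does not exceed max_rate."""
    if total_attacks <= 0:
        raise ValueError("total_attacks must be positive")
    return accepted_attacks / total_attacks <= max_rate


FIDO_STYLE_LIMIT = 0.07   # up to 7% IAPAR, as cited above
IBETA_STYLE_LIMIT = 0.0   # 0% APCER, as cited above

# Hypothetical result: 3 of 150 attack presentations were accepted (2%).
fido_style_pass = passes_limit(3, 150, FIDO_STYLE_LIMIT)
ibeta_style_pass = passes_limit(3, 150, IBETA_STYLE_LIMIT)
```

In this made-up case the same result clears the 7% limit but not the 0% limit, which is why a pass in one scheme should not be read as a pass in the other.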

The Role of DHS S&T RIVTD in Liveness Testing

Since 2018, the U.S. Department of Homeland Security Science and Technology Directorate (DHS S&T) has repeatedly hosted the Biometric Technology Rally, which is targeted at understanding operational performance of biometric acquisition and matching systems. The Rally evaluations have led to extensive reporting on biometric accuracy, especially regarding fairness across demographics. Like NIST, DHS S&T reports on specific performance of biometric systems, stack-ranks anonymized vendors (who can subsequently self-declare their code names), and compares them with varying target thresholds. Full information can be found at https://mdtf.org/.

Evolving from the Rally tests, which focused on acquisition and matching systems primarily targeting travel and border management applications, DHS S&T recently announced a series of Remote Identity Validation Technology Demonstration (RIVTD) tests targeting digital identity use cases. RIVTD includes three tracks:

- Track 1: Document Validation

- Track 2: Match to Document

- Track 3: Face Liveness

Following on the extensive reporting from all former DHS S&T biometric testing, it is expected that Track 3 will provide highly valuable insights into various aspects of face liveness in a manner that is complementary to iBeta, NIST FATE PAD, and other evaluations.

The Risk of Injection Attacks

Liveness detection is often discussed alongside “injection attack” mitigation. So what are injection attacks? The basic concept is that a fraudster takes a (seemingly) authentic video of a subject capturing a selfie and “injects” this video into a camera capture video feed, taking the place of what the camera might otherwise deliver. If the liveness detection mechanism works on a standard video feed, it may perceive the video as “live” as there is no physical presentation attack. Such an attack is much more straightforward to accomplish in the case of (e.g.) desktop webcam capture as opposed to iOS / iPhone capture, as in the former it is easy to mimic a video feed and insert fraudulent data whereas in the latter this is extremely difficult.

As solution providers look to offer more open / cross-platform authentication capabilities, injection attack mitigation should therefore be a key part of system design. There are multiple approaches to mitigating injection attacks, whether as part of the face imaging process itself or surrounding the payloads and systems interfaces. There is no one “right” answer, but rather a variety of approaches that can complement different technical, UI/UX, or policy considerations.
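As one illustration of a payload-level approach, the Python sketch below binds a captured image to a one-time server nonce with an HMAC, so a payload that was replayed or altered after capture fails verification. This is an assumed design rather than a description of any particular product: the function names and the idea of a secret key provisioned to a trusted capture component are hypothetical, and the check does not by itself prove that the camera feed was genuine.

```python
import hashlib
import hmac
import secrets

NONCE_BYTES = 16  # fixed length, so nonce + image concatenation is unambiguous


def issue_nonce():
    """Server side: create a one-time random value for a capture session."""
    return secrets.token_bytes(NONCE_BYTES)


def sign_capture(key, nonce, image_bytes):
    """Capture side: tag the image together with the session nonce."""
    return hmac.new(key, nonce + image_bytes, hashlib.sha256).digest()


def verify_capture(key, nonce, image_bytes, tag):
    """Server side: accept only payloads tagged for this nonce and image."""
    expected = sign_capture(key, nonce, image_bytes)
    return hmac.compare_digest(expected, tag)


# Hypothetical session with placeholder image bytes.
key = secrets.token_bytes(32)   # assumed to be provisioned securely
nonce = issue_nonce()
image = b"example-image-bytes"
tag = sign_capture(key, nonce, image)

genuine_ok = verify_capture(key, nonce, image, tag)
tampered_ok = verify_capture(key, nonce, b"swapped-image-bytes", tag)
replayed_ok = verify_capture(key, issue_nonce(), image, tag)
```

A check like this covers the systems-interface side only; it would typically sit alongside protections in the imaging process itself, in line with the point above that no single approach is the “right” answer.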

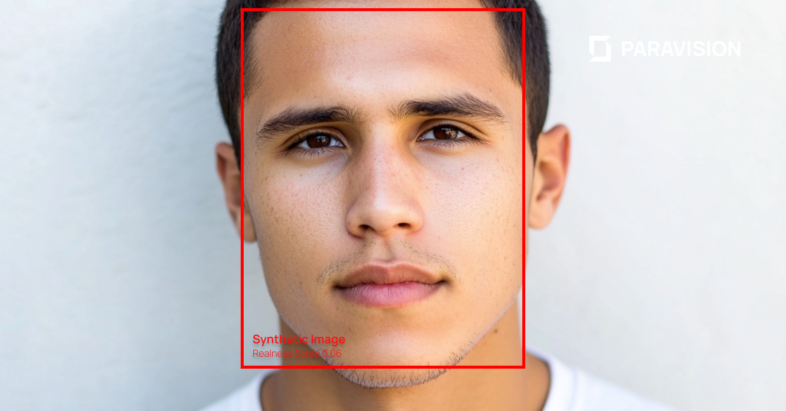

Liveness Detection vs. Deepfake Detection

The dramatic rise of visually compelling synthetic face and deepfake generation in recent years should be a major consideration for any solution provider deploying a modern digital identity system. While the focus of this article is not to explain deepfakes in general, it is critical to understand the difference between deepfake detection and liveness detection, as both deepfakes and physical presentation attacks are avenues for fraud in digital identity.

In short:

- Liveness detection is targeted at the detection of physical presentation attacks, such as face masks, high resolution displays, or printouts.

- Deepfake detection is targeted at the detection of digital presentation attacks, such as identity swaps, expression swaps, or otherwise.

These could be layered, of course. A fraudster could use a deepfake generator to make a face appear to be contextually correct, and then use a high resolution display to present that deepfaked face to a capture system. For this reason, digital identity systems should have mechanisms to protect against or detect both physical and digital presentation attacks. It should be noted that deepfakes and injection attacks have an inherent connection as in many cases a deepfake would be created through an ancillary piece of software and (mimicking a camera) injected into a system in order to complete the fraudulent attempt. Deepfake detection could thus be layered with injection attack protection, or actually serve as a key part of the injection attack protection itself.
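The layering described above can be sketched as a simple decision rule in which a capture is accepted only when every protective check passes. The class and field names here are hypothetical, and each boolean stands in for the result of a separate detector that is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResults:
    injection_check_passed: bool  # feed or payload integrity looks intact
    liveness_check_passed: bool   # no physical presentation attack detected
    deepfake_check_passed: bool   # no digital face manipulation detected


def failed_checks(results):
    """List the names of the checks that did not pass."""
    checks = {
        "injection": results.injection_check_passed,
        "liveness": results.liveness_check_passed,
        "deepfake": results.deepfake_check_passed,
    }
    return [name for name, passed in checks.items() if not passed]


def accept(results):
    """Accept the capture only if no check failed."""
    return not failed_checks(results)


# Hypothetical case: a deepfaked face shown on a high resolution display.
layered_attack = CheckResults(
    injection_check_passed=True,
    liveness_check_passed=False,
    deepfake_check_passed=False,
)
```

Reporting which checks failed, rather than a single yes or no, makes it easier to see whether a rejected attempt looked like a physical attack, a digital one, or both.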

Paravision’s Approach to Liveness Detection

To help partners navigate a landscape with increasingly sophisticated digital identity threats, Paravision is happy to offer Paravision Liveness.

By combining passive liveness with live image quality feedback, Paravision sets a new standard in user friendly, high-accuracy liveness detection. Optimized for mobile capture with SDKs for iOS and Android, with server-side liveness detection, Paravision Liveness provides a secure liveness detection option for digital identity verification partners.

While optimized for deployment with Paravision’s advanced image quality checks, Paravision Liveness works on single faces, making it ideal for fully integrated or API-based digital identity systems. It also layers naturally with deepfake detection and injection attack mitigation techniques.

In addition to the liveness detection for standard cameras, Paravision Liveness-Embedded provides a highly accurate liveness detection product suite for embedded devices with multi-sensor cameras. Similar to Paravision Liveness, our Liveness for Specialized Cameras enables a fully passive experience, enabling advanced protection without compromising user experience.

To learn more about Paravision’s highly accurate, user friendly, and easy-to-deploy liveness detection product suite, read more at https://www.paravision.ai/liveness/.
