On September 20, 2023, the National Institute of Standards and Technology (NIST) published its latest Face Analysis Technology Evaluation (FATE) report – NIST IR 8491 – which focuses on characterizing Presentation Attack Detection (PAD) technologies. Here, we will break down the terms of art, test criteria and results to help decode this rich but complex report.

NIST FATE Background

Recently, NIST restructured and renamed its long-standing FRVT tests, separating tests into those focused on face recognition technology (as FRTE) and those focused on face analysis technology (as FATE). For more detail on the change, please see our blog post here. In any case, this most recent report makes sense of the name change: Presentation Attack Detection is not face recognition, but it is a type of analysis on face attributes, and therefore is perfectly suited to the FATE category.

Presentation Attack Detection (PAD) Background

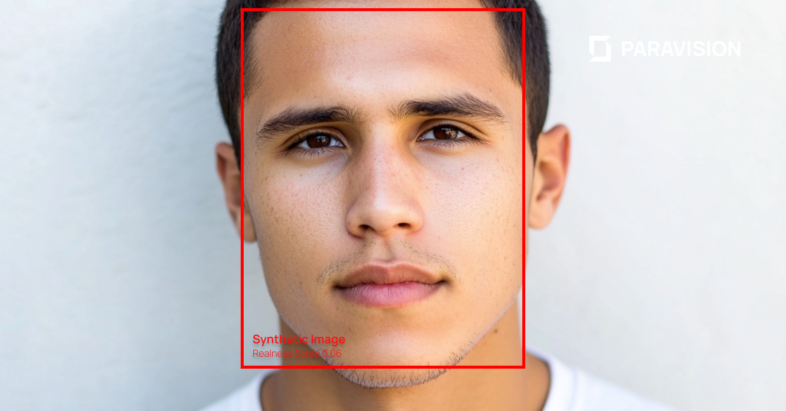

Presentation Attack Detection (PAD), which has been standardized by ISO/IEC 30107, is more commonly referred to as anti-spoofing or liveness detection. In short, it is technology that attempts to determine whether a presented face is authentic, or whether there is some attempt to falsify a face or otherwise subvert a face recognition system. For more detail on PAD, please see Paravision’s white paper An Introduction to Presentation Attack Detection. NIST FATE PAD is the first report by NIST characterizing this type of technology.

What’s in scope, what’s out of scope for NIST FATE PAD

NIST FATE PAD looks specifically at passive PAD systems that rely on images or videos from standard RGB cameras. It does not include active systems that require specific user interaction with a UI or specific illumination patterns. It also does not include approaches to PAD that require specialized hardware, such as near-infrared cameras or illumination, or depth sensing.

NIST FATE PAD looks at two types of interactions: impersonation and evasion. Impersonation covers the case where someone is trying to fraudulently access a system with a recreation of another person’s face. Evasion covers the case where someone is trying to avoid their face being matched.

In each case, the report looks at the performance of submitted software against 9 different presentation attack (PA) types. While the report indicates that these types are all based on well-known, public domain PAs, they are not described in detail, and in some cases not at all. The report includes high-level descriptions of PAs when multiple vendors delivered good results, but does not include any description for PAs that were not successfully detected by multiple vendors. So, for instance, the report indicates that “PA Type 3” is a “flexible silicone mask” and that “PA Type 8” is a “photo print / replay attack,” but it does not include any description at all for PA Type 1, 2, 4, 5, 7, or 9.

Terminology Used

Presentation Attack Detection has its own terminology as articulated in ISO 30107 and used in NIST FATE PAD. The key terms which may not be familiar to readers are:

- APCER – Short for Attack Presentation Classification Error Rate, this metric looks at the rate of presentation attacks (a.k.a. spoofs) being wrongly accepted by a system. A reasonable proxy for APCER is False Accept Rate, i.e., when a sample shouldn’t pass for some reason but does.

- BPCER – Short for Bona Fide Presentation Classification Error Rate, this metric looks at the rate of authentic faces being wrongly rejected by a system. A reasonable proxy for BPCER is False Reject Rate, i.e., when a sample should pass but for some reason does not.

A security-oriented system is typically configured to minimize APCER, whereas a convenience-oriented system is typically configured to minimize BPCER. This fact is utilized throughout the results portion of NIST FATE PAD.
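To make the two definitions above concrete, here is a minimal sketch of how they could be computed from raw PAD scores. The score convention is an assumption for illustration only: a hypothetical score in [0, 1] where higher means "more likely an attack", with a sample flagged as an attack when its score is at or above the threshold. The function names and sample values are made up and are not taken from the report.

```python
from typing import Sequence


def apcer(attack_scores: Sequence[float], threshold: float) -> float:
    """Share of attack presentations that slip through as bona fide.

    Assumed convention: a sample is flagged as an attack when
    score >= threshold, so an attack scoring below it is missed.
    """
    if not attack_scores:
        raise ValueError("need at least one attack score")
    missed = sum(1 for s in attack_scores if s < threshold)
    return missed / len(attack_scores)


def bpcer(bona_fide_scores: Sequence[float], threshold: float) -> float:
    """Share of bona fide presentations wrongly flagged as attacks."""
    if not bona_fide_scores:
        raise ValueError("need at least one bona fide score")
    rejected = sum(1 for s in bona_fide_scores if s >= threshold)
    return rejected / len(bona_fide_scores)


# Made-up scores for illustration only.
attack_scores = [0.91, 0.85, 0.40, 0.77, 0.95]
bona_fide_scores = [0.05, 0.10, 0.62, 0.20, 0.08]

print(apcer(attack_scores, 0.5))     # one of five attacks missed -> 0.2
print(bpcer(bona_fide_scores, 0.5))  # one of five real faces rejected -> 0.2
```

Raising the threshold in this sketch lowers BPCER at the cost of a higher APCER, and lowering it does the reverse, which is the security vs. convenience trade-off described above.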

Test Results

The test results are primarily presented as a series of tables that show the scores for the top performers for each PA type. Within each table, results are shown for both the convenience-oriented configuration (where BPCER is fixed to 0.01 and the APCER is variable) as well as the security-oriented configuration (where APCER is fixed to 0.01 and the BPCER is variable).
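As a rough illustration of what "fixing" one error rate means, the sketch below picks a threshold that holds one rate at or below 0.01 and then reads off the other. Everything here is an assumption for illustration: the score convention (higher means "more likely an attack"), the helper names, and the synthetic scores, none of which come from the report or any submission.

```python
import math
import random


def error_rates(attack, bona_fide, threshold):
    """Return (APCER, BPCER), flagging a sample as an attack when score >= threshold."""
    apcer = sum(s < threshold for s in attack) / len(attack)
    bpcer = sum(s >= threshold for s in bona_fide) / len(bona_fide)
    return apcer, bpcer


def operating_point(attack, bona_fide, fixed, target=0.01):
    """Pick a threshold among observed scores that keeps the `fixed` rate <= target.

    fixed="bpcer": convenience-oriented point (lowest qualifying threshold,
    which keeps APCER as small as possible).
    fixed="apcer": security-oriented point (highest qualifying threshold,
    which keeps BPCER as small as possible).
    """
    candidates = sorted(set(attack) | set(bona_fide)) + [math.inf]
    if fixed == "apcer":
        candidates.reverse()
    elif fixed != "bpcer":
        raise ValueError("fixed must be 'apcer' or 'bpcer'")
    for t in candidates:
        apcer, bpcer = error_rates(attack, bona_fide, t)
        if (bpcer if fixed == "bpcer" else apcer) <= target:
            return t, apcer, bpcer
    raise ValueError("no threshold satisfies the target")


# Synthetic scores for illustration only.
rng = random.Random(0)
bona_fide = [rng.gauss(0.2, 0.1) for _ in range(500)]
attack = [rng.gauss(0.7, 0.15) for _ in range(500)]

for fixed in ("bpcer", "apcer"):
    t, a, b = operating_point(attack, bona_fide, fixed)
    print(f"{fixed} fixed at 0.01: threshold={t:.3f} APCER={a:.4f} BPCER={b:.4f}")
```

The same set of scores therefore yields two different rows of results, one per configuration, which is why each table in the report carries both.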

Important Note: There is no single “leaderboard” metric in NIST FATE PAD. In other words, there is no single identified use case or presentation attack that is viewed as a primary metric. In addition, there is no test that shows aggregate accuracy across multiple PA types. This is in contrast to NIST FRTE, which delivers metrics on a use-case basis (e.g., comparing travel visa images and border crossing images).

Rather, the results of NIST FATE PAD are presented in a PA Type-by-PA Type series of tables. As opposed to addressing a given use case (e.g., remote ID verification or self-service travel), the report helps to understand performance against select presentation attack types. This breakdown highlights both the complexity of PAD as well as the broad variability in performance from one PA type to another. The number of tables presented is further multiplied by measurement of both impersonation and evasion types.

In summary, the report results include tables summarizing the top 10 accuracy leaders:

- By 9 different presentation attack types

- By thresholds set for convenience vs. security use cases

- By impersonation and evasion use cases

In addition to these “top 10” tables, full accuracy analysis is provided for all submissions later in one of the report appendices. This includes Detection Error Tradeoff (“DET”) curves that show a more complete relationship between APCER and BPCER across the full sweep of thresholds (as opposed to the single APCER = 0.01 / BPCER = 0.01 thresholds summarized in the results tables).
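For readers less familiar with DET curves, the sketch below shows the underlying idea: sweep the decision threshold across every observed score and record the (APCER, BPCER) pair at each step. It is an illustration only, assuming a hypothetical score where higher means "more likely an attack"; it is not how the report's plots were produced, and the sample scores are made up.

```python
def det_points(attack, bona_fide):
    """Return (threshold, APCER, BPCER) for each candidate threshold.

    Assumed convention: a sample is flagged as an attack when
    score >= threshold.
    """
    thresholds = sorted(set(attack) | set(bona_fide)) + [float("inf")]
    points = []
    for t in thresholds:
        apcer = sum(s < t for s in attack) / len(attack)
        bpcer = sum(s >= t for s in bona_fide) / len(bona_fide)
        points.append((t, apcer, bpcer))
    return points


# Made-up scores for illustration only.
attack = [0.9, 0.8, 0.3]
bona_fide = [0.1, 0.2, 0.6]

for t, a, b in det_points(attack, bona_fide):
    print(f"threshold={t:<5} APCER={a:.2f} BPCER={b:.2f}")
```

Each row is one point on the curve. The fixed 0.01 operating points in the summary tables correspond to just two such points, whereas the full sweep shows how quickly one error rate degrades as the other is tightened.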

Finally, the report delves into certain operational considerations, including the use of video vs. still imagery and the potential value of fusing the results from multiple PAD submissions.

Interpreting Report Results

Here, we will not be providing an interpretation of the results. For those who are interested in an interpretation, we recommend the following approach / considerations:

- Assess whether impersonation or evasion (or both) is the key criterion for a given use case.

- Create summary tables that look at performance across the range of presentation attack types reported.

- Look for trends across the various presentation attack types, rather than outstanding performance in one given category, as real world usage will entail a wide variety of presentation attacks.

In addition, while rankings can be useful, we recommend treating actual accuracy (the reported APCER and BPCER values), rather than vendor rank, as the key metric: the accuracy falloff for certain presentation attack types is quite severe, even among the top-ranked performers.
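One way to build the kind of summary table suggested above is sketched here. The algorithm names, PA type labels and APCER values are all hypothetical placeholders, not figures from NIST IR 8491; the idea is to transcribe the values of interest from the report's tables and then compare average and worst-case error rates rather than rank.

```python
from statistics import mean

# Hypothetical APCER values at BPCER = 0.01; NOT taken from NIST IR 8491.
results = {
    "algo_A": {"PA 1": 0.02, "PA 3": 0.10, "PA 8": 0.01},
    "algo_B": {"PA 1": 0.30, "PA 3": 0.05, "PA 8": 0.00},
}


def summarize(results):
    """Per-algorithm mean and worst-case APCER, sorted by worst case (best first)."""
    rows = []
    for name, by_type in results.items():
        worst_type = max(by_type, key=by_type.get)
        rows.append({
            "algorithm": name,
            "mean_apcer": mean(by_type.values()),
            "worst_apcer": by_type[worst_type],
            "worst_type": worst_type,
        })
    return sorted(rows, key=lambda r: r["worst_apcer"])


for row in summarize(results):
    print(f"{row['algorithm']}: mean={row['mean_apcer']:.3f} "
          f"worst={row['worst_apcer']:.2f} ({row['worst_type']})")
```

Sorting by worst case rather than by any single PA type is a design choice that reflects the point above: a system that leads one table can still fall off sharply on another attack type.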

For more information or insights into NIST FATE PAD, NIST FATE / FRTE in general, or Presentation Attack Detection more broadly, please do not hesitate to reach out to us at [email protected].
