Why Inclusion Matters in Identity AI

By Kamil Chaudhary, General Counsel and SVP of Data Acquisition, Paravision

As AI continues to reshape how we verify identity, board a plane, or open a bank account, there’s a question that deserves constant attention: does this technology work for everyone? At Paravision, we believe the answer must be yes. Not sometimes, not statistically on average, but across the full spectrum of demographics that make up our global community. That’s why inclusion remains a cornerstone of how we build and evaluate our technologies—not just a principle, but a performance goal.

Over the past year, we’ve seen remarkable progress in Identity AI. Face recognition accuracy has reached new highs. Deepfake detection is no longer experimental; it’s essential. Liveness detection has become smoother and more secure. But amid these innovations, one thing hasn’t changed: systems that don’t perform well for everyone create risk, erode trust, and ultimately fail in the real world.

To ensure that doesn’t happen, we hold ourselves to the toughest standard: worst-case performance. That means measuring not just average accuracy, but how well our technology works for the most challenging demographic group. Imagine three fences: one that favors certain groups and leaves others behind (high demographic differentials), one that treats all groups equally but performs poorly overall (low bias but high error), and one where the fence has been removed altogether, delivering high accuracy for everyone. That last scenario is our goal.

Rather than simply minimizing disparity between groups, we aim to minimize error for all groups. This means focusing not just on fairness, but on excellence—ensuring our systems work equitably and effectively across all demographic groups without compromising on performance. It’s how we move beyond bias mitigation to true inclusion.

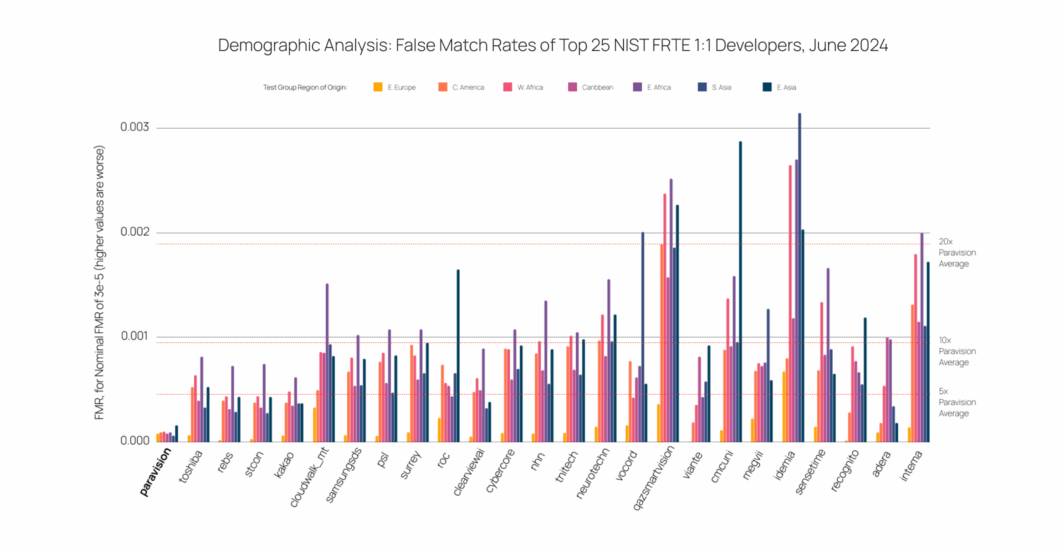

In the NIST FRTE 1:1 evaluation, the industry’s most rigorous demographic benchmark, the worst-case scenario is measured by FMR Max, or Maximum False Match Rate. FMR Max is the highest rate at which a face recognition system incorrectly matches two different individuals within a single demographic group. It reflects the system’s worst-case accuracy and is a critical metric for evaluating whether the technology performs fairly and reliably across all populations. Paravision achieved a best-in-class FMR Max of just 0.00086 in NIST FRTE 1:1, dramatically outperforming competitors. For context, at the time of the submission, the next-best vendor had an FMR Max more than 5 times higher, and many vendors in the top 25 showed FMR Max scores up to 24x worse.
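To make the idea concrete, here is a minimal sketch of how a worst-case false match rate could be computed from comparison scores. It is a simplified illustration, not NIST’s evaluation procedure: the function names, the group labels, the scores, and the 0.9 threshold are all hypothetical, and each record is assumed to be a comparison between two different people from the same demographic group.

```python
from collections import defaultdict

def fmr_by_group(impostor_comparisons, threshold):
    """Return the false match rate per group.

    impostor_comparisons: iterable of (group, score) pairs, where each score
    comes from comparing two DIFFERENT people (hypothetical input format).
    A score at or above the threshold counts as a false match.
    """
    totals = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score in impostor_comparisons:
        totals[group] += 1
        if score >= threshold:
            false_matches[group] += 1
    return {group: false_matches[group] / totals[group] for group in totals}

def fmr_max(impostor_comparisons, threshold):
    """Return (worst_group, worst_rate): the group with the highest FMR."""
    rates = fmr_by_group(impostor_comparisons, threshold)
    worst_group = max(rates, key=rates.get)
    return worst_group, rates[worst_group]

# Made-up scores for two illustrative groups (not real benchmark data).
impostor_scores = [
    ("group_a", 0.10), ("group_a", 0.20), ("group_a", 0.95), ("group_a", 0.30),
    ("group_b", 0.15), ("group_b", 0.05), ("group_b", 0.25), ("group_b", 0.35),
]

rates = fmr_by_group(impostor_scores, threshold=0.9)
worst_group, worst_rate = fmr_max(impostor_scores, threshold=0.9)
average_rate = sum(rates.values()) / len(rates)

print(rates)                    # per-group false match rates
print(worst_group, worst_rate)  # the worst-case group and its rate
print(average_rate)             # the average hides the worst case
```

In this toy data the average across groups is half of the worst group’s rate, which is the kind of gap an average-only score can conceal and a worst-case metric exposes.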

FMR Max is the most important metric when evaluating inclusion. Unlike overall accuracy scores that can obscure bias, FMR Max reveals how the technology performs for the single worst-case demographic group. It’s a window into whether your system truly treats all people equally, and a critical number for sectors like banking, border control, and digital identity where even a few false matches can cause real harm.

But we also know inclusion isn’t something you claim once and move on from. It’s something you prove continuously. It’s why we train on broad, balanced datasets. Why we prioritize transparency through public benchmarks. And why we collaborate with partners who care about not just what a system can do, but who it serves.

For us at Paravision, inclusion is not the finish line; it’s the foundation.

As regulations tighten globally and public expectations rise, we invite our partners and peers to make inclusion part of their own performance standard. Because whether you’re building a national identity program, a financial onboarding flow, or a smart access system, who your technology recognizes, and how well it recognizes them, matters.

At Paravision, we’ll keep doing the work to ensure that AI doesn’t just perform well, but performs fairly. Because when we get inclusion right, we build systems that are not only more ethical—but more effective, more scalable, and more trusted.

Read our Inclusion in Face Recognition white paper for more detail: https://www.paravision.ai/resource/inclusion-in-face-recognition/.

Kamil Chaudhary is General Counsel and SVP of Data Acquisition at Paravision, and leads the company’s public policy.